Chatting robot with behavior learning

Jul 20, 2017

Co-authors: Katsushi Ikeuchi, David Baumert, Yutaka Suzue, Masaru Takizawa, Kazuhiro Sasabuchi, et al.

My contribution:

- System design and implementation (on top of ROS).

Related work was demoed at “Microsoft Research Faculty Summit 2017: The Edge of AI” (Automatic Description of Human Motion and Its Reproduction by Robot Based on Labanotation) and “2017 MSRA academic day” (Human-Robot Collaboration).

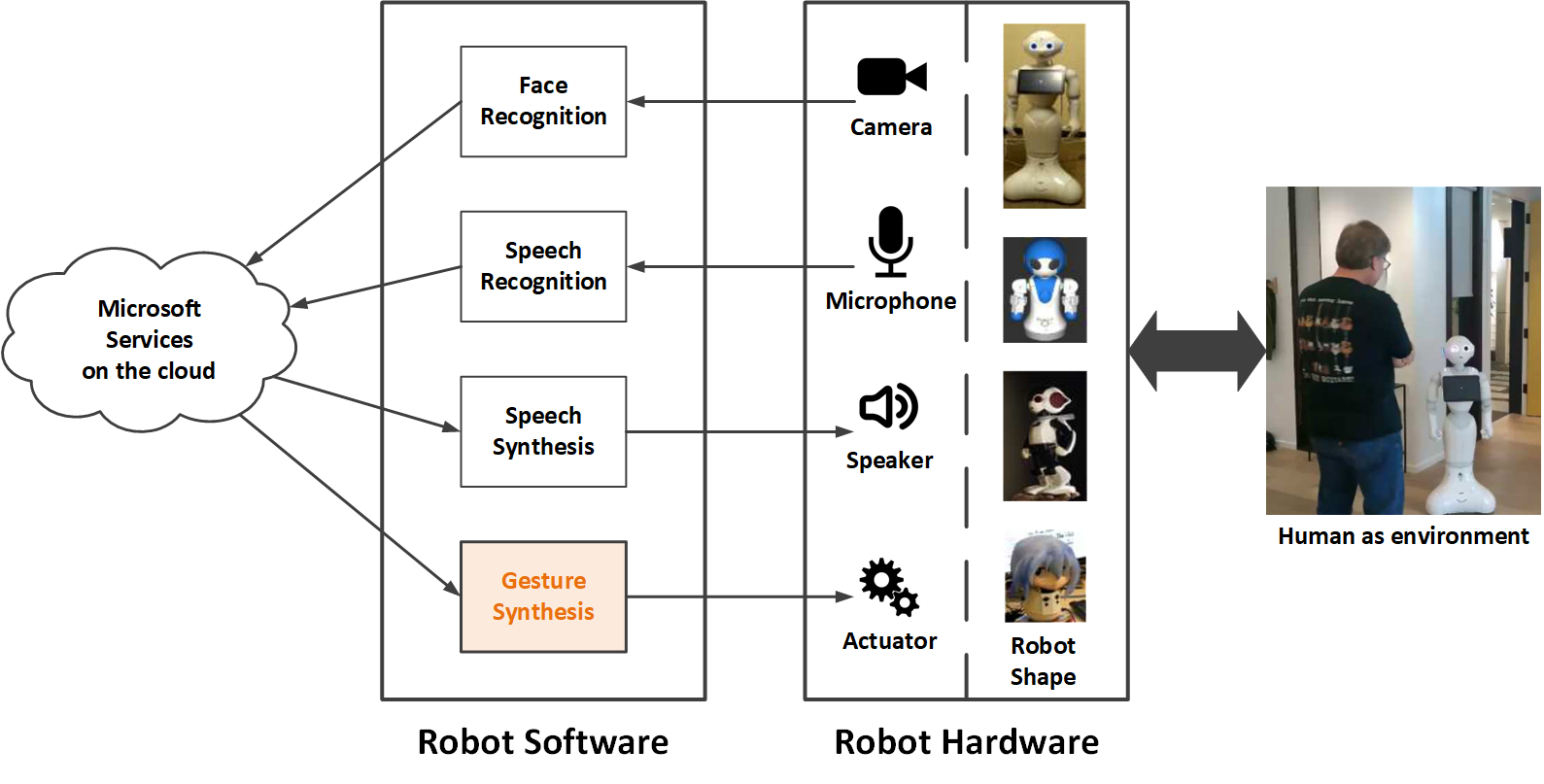

We propose a cloud-based system that enables service robots to generate human-like motions matching the ongoing conversation while they chat with people. In conversation, the gestures that accompany spoken sentences, often called body language, play an important role. This is particularly true for humanoid service robots, whose key merit is their resemblance to humans in both shape and behavior. Currently, physical robots are not expected to reply with gestures when they encounter content outside their fixed knowledge. To fill this gap, we built a working prototype using a SoftBank Pepper robot and Microsoft Cognitive Services, shown in the diagram below.

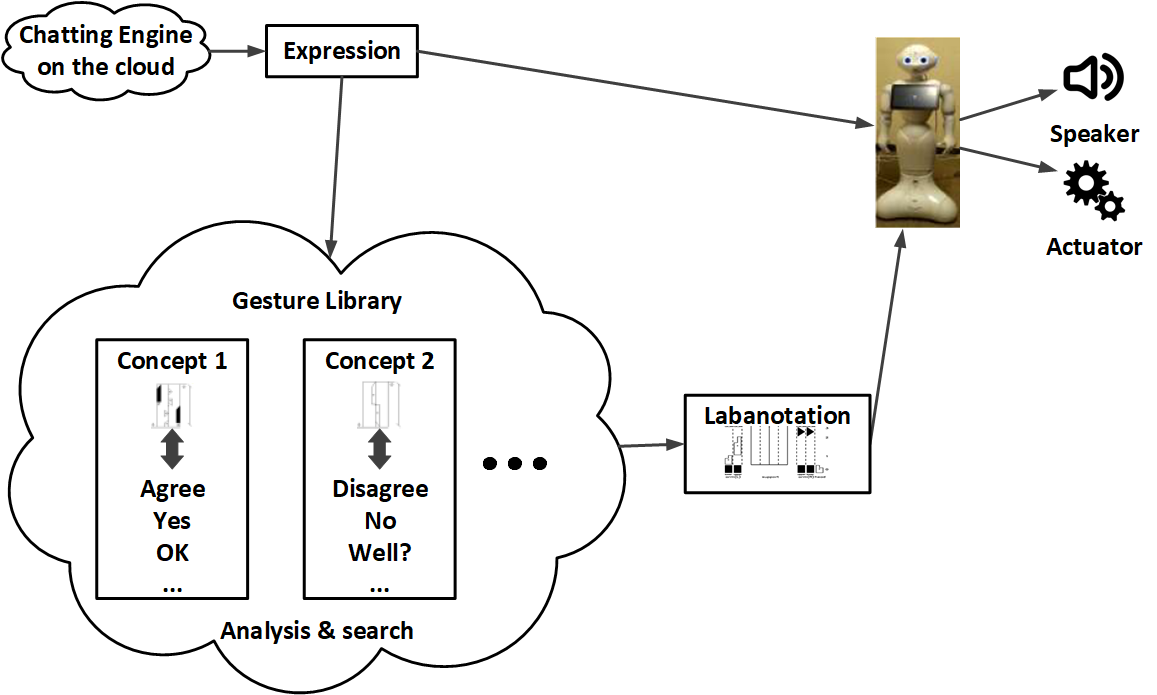

To enable gesture synthesis, we built a “gesture library” that serves three purposes. First, it contains common gestures. Second, it analyzes a sentence and maps it to a concept within the library. Third, it searches the library for that concept and selects a suitable gesture, written in Labanotation, to drive the robot. The system diagram is shown below.
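The sentence-to-concept-to-gesture lookup described above can be sketched roughly as follows. This is a minimal illustration, not the project's implementation: the keyword-overlap matching, the concept names, and the Labanotation file names are all assumptions made for the example.

```python
import random
import re
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass
class GestureLibrary:
    # concept -> trigger keywords (hypothetical; the real mapping mechanism is not described)
    keywords: Dict[str, Set[str]]
    # concept -> Labanotation score files for that concept (hypothetical file names)
    gestures: Dict[str, List[str]]
    # concept used when no keyword matches (assumed fallback behavior)
    default_concept: str = "neutral"

    def sentence_to_concept(self, sentence: str) -> str:
        """Map a sentence to the concept whose keywords overlap it the most."""
        words = set(re.findall(r"[a-z']+", sentence.lower()))
        best, best_hits = self.default_concept, 0
        for concept, concept_keywords in self.keywords.items():
            hits = len(words & concept_keywords)
            if hits > best_hits:
                best, best_hits = concept, hits
        return best

    def select_gesture(self, sentence: str) -> Tuple[str, str]:
        """Return (concept, gesture file) for a sentence."""
        concept = self.sentence_to_concept(sentence)
        candidates = self.gestures.get(concept) or self.gestures[self.default_concept]
        return concept, random.choice(candidates)


# Tiny illustrative library; the real one holds about 40 concepts.
library = GestureLibrary(
    keywords={
        "greeting": {"hello", "hi", "welcome"},
        "farewell": {"bye", "goodbye"},
    },
    gestures={
        "greeting": ["greeting_wave.laban.json"],
        "farewell": ["farewell_wave.laban.json"],
        "neutral": ["neutral_idle.laban.json"],
    },
)

print(library.select_gesture("Hello, nice to meet you!"))
print(library.select_gesture("The weather is cloudy today."))
```

Keeping the concept as an intermediate step means new gestures can be added under an existing concept without touching the sentence analysis.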

To prepare the library, we collected common gestures from daily conversations using our Learning-from-Observation system (you can find more details here), clustered them into about 40 concepts, and designed a mapping mechanism to pair a given sentence with a concept. To implement a complete system, we also employed Microsoft face tracking, speech recognition, chat engine, and text-to-speech services. As a result, our robots can hold conversations with humans and automatically generate meaningful physical behaviors to accompany their spoken words.
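One conversational turn through such a system might be wired together as in the sketch below. Every service here is a hypothetical stand-in passed in as a plain callable; none of these names correspond to the actual Microsoft Cognitive Services, ROS, or Pepper APIs, and face tracking is left out for brevity.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ConversationTurn:
    heard: str    # text recognized from the user's speech
    reply: str    # text produced by the chat engine
    gesture: str  # gesture chosen to accompany the reply


def run_turn(
    audio: bytes,
    recognize: Callable[[bytes], str],     # stand-in for speech recognition
    chat: Callable[[str], str],            # stand-in for the chat engine
    pick_gesture: Callable[[str], str],    # stand-in for the gesture library lookup
    speak: Callable[[str], None],          # stand-in for text-to-speech
    play_gesture: Callable[[str], None],   # stand-in for the robot motion driver
) -> ConversationTurn:
    """Run one listen -> reply -> gesture + speech cycle."""
    heard = recognize(audio)
    reply = chat(heard)
    # The gesture is chosen from the robot's own reply so it accompanies the spoken words.
    gesture = pick_gesture(reply)
    # Called sequentially here; a real robot would likely run these concurrently.
    speak(reply)
    play_gesture(gesture)
    return ConversationTurn(heard, reply, gesture)


# Demo with trivial stubs in place of the cloud services and the robot.
turn = run_turn(
    audio=b"",
    recognize=lambda _audio: "hello robot",
    chat=lambda text: "Hi, nice to meet you!",
    pick_gesture=lambda reply: "greeting_wave",
    speak=lambda text: print("say:", text),
    play_gesture=lambda name: print("move:", name),
)
print(turn)
```

Passing the services in as callables keeps the turn logic testable without a robot or network access.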

You can find more details about this project in Katsu’s talk at Microsoft Research Faculty Summit 2017 (starts at 48:26). Related slides can be found here.