Model predictive control

Types of MPC

| MPC type | Detail |
|---|---|
| Linear MPC | |
| Non-linear MPC | |
| Economic MPC | |
| Tube MPC | See the paper "Robust model predictive control using tubes" |
| MPC with RL | |

Key components:

| Item | Detail |
|---|---|
| System model | Describes how the inputs drive the evolution of the states |
| Cost function | Maps inputs and states to a scalar cost; consists of a running cost (the cost at each step) and a terminal cost (the cost at the final step) |
| Constraints | Inputs and states must stay within admissible ranges |
| Optimizer | Adjusts the inputs, subject to all constraints, to minimize (or maximize) the cost |

Process

- The optimized input is a sequence that looks ahead over a prediction horizon. However, the system applies only the first input of that sequence at the next time step, then measures the new states and repeats the prediction to generate a new input sequence.
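A minimal sketch of this receding-horizon loop, assuming a hypothetical scalar linear system x_{k+1} = a x_k + b u_k with a quadratic cost and no constraints, so that the optimizer can be replaced by a backward Riccati recursion. Function names and numbers are illustrative only.

```python
def first_optimal_input(x, a, b, q, r, p_terminal, horizon):
    """Solve the unconstrained finite-horizon problem
        min sum_{k=0}^{N-1} (q x_k^2 + r u_k^2) + p_terminal x_N^2
    for x_{k+1} = a x_k + b u_k by a backward Riccati recursion,
    and return only the first optimal input u_0."""
    p = p_terminal
    gain = 0.0
    for _ in range(horizon):
        gain = (b * p * a) / (r + b * p * b)      # gain for this stage
        p = q + a * p * a - a * p * b * gain      # cost-to-go one stage earlier
    return -gain * x                              # after the loop: stage-0 gain


def receding_horizon(x0, steps, a=1.2, b=1.0, q=1.0, r=0.1, p_terminal=1.0, horizon=10):
    """Closed loop: plan over the horizon, apply only u_0, measure, re-plan."""
    x = x0
    trajectory = [x]
    for _ in range(steps):
        u = first_optimal_input(x, a, b, q, r, p_terminal, horizon)
        x = a * x + b * u          # plant moves one step; new state is "measured"
        trajectory.append(x)
    return trajectory


print(receding_horizon(1.0, steps=5))
```

The whole input sequence is recomputed at every step, but only its first element ever reaches the plant.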

Characteristics:

- Main drawback: an open-loop model of the system must be identified offline.

Linear MPC (CDC’19 workshop, slides 1)

Linear prediction model:

- Relationship to the linear control model:

If the linear control model is written as

, then the next state is
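A minimal sketch, assuming the usual discrete-time form x_{k+1} = A x_k + B u_k. Unrolling the recursion gives x_k = A^k x_0 + sum_{j=0}^{k-1} A^j B u_{k-1-j}, which the code checks against step-by-step simulation. Matrices are plain nested lists to stay dependency-free; all helper names are hypothetical.

```python
def mat_vec(M, v):
    """Matrix-vector product for nested-list matrices."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def mat_mul(M1, M2):
    """Matrix-matrix product for nested-list matrices."""
    cols = list(zip(*M2))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in M1]


def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def predict_state(A, B, x0, inputs, k):
    """Closed-form prediction x_k = A^k x0 + sum_{j=0}^{k-1} A^j B u_{k-1-j}."""
    n = len(A)
    forced = [0.0] * n
    A_pow = identity(n)                                        # A^0
    for j in range(k):
        term = mat_vec(A_pow, mat_vec(B, inputs[k - 1 - j]))   # A^j B u_{k-1-j}
        forced = [f + t for f, t in zip(forced, term)]
        A_pow = mat_mul(A_pow, A)                              # becomes A^(j+1)
    free = mat_vec(A_pow, x0)                                  # A_pow is now A^k
    return [a + b for a, b in zip(free, forced)]


def simulate(A, B, x0, inputs):
    """Step-by-step simulation of x_{k+1} = A x_k + B u_k; returns [x_0, ..., x_N]."""
    xs = [list(x0)]
    for u in inputs:
        xs.append([a + b for a, b in zip(mat_vec(A, xs[-1]), mat_vec(B, u))])
    return xs
```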

Performance index (without constraints)

- Performance index defined as:

- Optimization:

Derivation
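A scalar sketch of the unconstrained derivation, assuming the standard quadratic index J = sum_{k=0}^{N-1} (q x_k^2 + r u_k^2) + p x_N^2. Substituting the prediction X = S z + T x_0 with z = [u_0, ..., u_{N-1}] gives a quadratic in z whose minimizer is z* = -H^{-1} F x_0, with H = 2 (S' Qbar S + Rbar) and F = 2 S' Qbar T. The linear solver is a hand-rolled Gaussian elimination; all names are hypothetical.

```python
def solve_linear(M, rhs):
    """Gaussian elimination with partial pivoting (M square and nonsingular)."""
    n = len(M)
    aug = [list(M[i]) + [rhs[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda i: abs(aug[i][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for i in range(col + 1, n):
            factor = aug[i][col] / aug[col][col]
            for j in range(col, n + 1):
                aug[i][j] -= factor * aug[col][j]
    z = [0.0] * n
    for i in reversed(range(n)):
        s = aug[i][n] - sum(aug[i][j] * z[j] for j in range(i + 1, n))
        z[i] = s / aug[i][i]
    return z


def batch_unconstrained(a, b, q, r, p, N, x0):
    """Optimal input sequence z* = -H^{-1} F x0 for x_{k+1} = a x_k + b u_k."""
    # Prediction x_k = a^k x0 + sum_{j<k} a^(k-1-j) b u_j, for k = 1..N
    S = [[a ** (k - 1 - j) * b if j <= k - 1 else 0.0 for j in range(N)]
         for k in range(1, N + 1)]
    T = [a ** k for k in range(1, N + 1)]
    w = [q] * (N - 1) + [p]          # diagonal of Qbar: stage weight q, terminal weight p
    H = [[2.0 * (sum(S[k][i] * w[k] * S[k][j] for k in range(N)) + (r if i == j else 0.0))
          for j in range(N)] for i in range(N)]
    F_x0 = [2.0 * sum(S[k][i] * w[k] * T[k] for k in range(N)) * x0 for i in range(N)]
    return solve_linear(H, [-v for v in F_x0])
```

Without constraints, the first element of z* equals the first input of the finite-horizon LQR (Riccati) solution, so this batch form is mainly useful as the starting point for adding constraints.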

Performance with constraints

- Add constraints to enforce:

From the above, both conditions can be written in linear form:
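A scalar sketch of how box limits on inputs and states (assumed here to be the two conditions) become linear inequalities G z <= W + E x_0 once each x_k is replaced by its prediction. The names G, W, E and both helpers are illustrative.

```python
def build_constraints(a, b, N, u_min, u_max, x_min, x_max):
    """Write u_min <= u_k <= u_max (k = 0..N-1) and x_min <= x_k <= x_max (k = 1..N)
    for x_{k+1} = a x_k + b u_k as  G z <= W + E x0,  with z = [u_0, ..., u_{N-1}]."""
    # Prediction x_k = T[k-1] x0 + S[k-1] . z
    S = [[a ** (k - 1 - j) * b if j <= k - 1 else 0.0 for j in range(N)]
         for k in range(1, N + 1)]
    T = [a ** k for k in range(1, N + 1)]
    G, W, E = [], [], []
    for i in range(N):                      # input bounds: no dependence on x0
        e_i = [1.0 if j == i else 0.0 for j in range(N)]
        G.append(e_i)                       # u_i <= u_max
        W.append(u_max)
        E.append(0.0)
        G.append([-v for v in e_i])         # -u_i <= -u_min
        W.append(-u_min)
        E.append(0.0)
    for k in range(N):                      # state bounds: substitute the prediction
        G.append(list(S[k]))                # S z <= x_max - T x0
        W.append(x_max)
        E.append(-T[k])
        G.append([-v for v in S[k]])        # -S z <= -x_min + T x0
        W.append(-x_min)
        E.append(T[k])
    return G, W, E


def is_feasible(G, W, E, z, x0, tol=1e-12):
    """Check every row of G z <= W + E x0."""
    return all(sum(g * zj for g, zj in zip(row, z)) <= w + e * x0 + tol
               for row, w, e in zip(G, W, E))
```

Together with the quadratic cost, these rows give a QP in z whose right-hand side depends on the measured x_0.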

Tracking, disturbances, and delay

Tracking
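One common tracking formulation (assumed here, not taken from the slides) penalizes the output error y_k - r and the input increments du_k = u_k - u_{k-1}. To keep the standard MPC structure, the previous input is appended to the state. A sketch with nested-list matrices; the function names are hypothetical.

```python
def augment_for_delta_u(A, B, C):
    """Augment x_{k+1} = A x_k + B u_k, y_k = C x_k with the previous input so that
    the decision variable becomes the increment du_k = u_k - u_{k-1}:
        xi_k     = [x_k; u_{k-1}]
        xi_{k+1} = [[A, B], [0, I]] xi_k + [[B], [I]] du_k
        y_k      = [C, 0] xi_k"""
    n, m = len(A), len(B[0])
    eye = [[1.0 if i == j else 0.0 for j in range(m)] for i in range(m)]
    A_aug = [list(A[i]) + list(B[i]) for i in range(n)] + [[0.0] * n + eye[i] for i in range(m)]
    B_aug = [list(B[i]) for i in range(n)] + [list(eye[i]) for i in range(m)]
    C_aug = [list(row) + [0.0] * m for row in C]
    return A_aug, B_aug, C_aug


def tracking_cost(ys, ref, dus, w_y=1.0, w_du=0.1):
    """Scalar tracking cost: w_y * sum (y - ref)^2 + w_du * sum du^2."""
    return w_y * sum((y - ref) ** 2 for y in ys) + w_du * sum(du ** 2 for du in dus)
```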

Disturbances

- Measured disturbance

- Get QP:
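A scalar sketch, assuming the measured disturbance enters as x_{k+1} = a x_k + b u_k + b_v v_k with v known over the horizon. It only shifts the free response, X = S z + T x_0 + S_v v, so the QP Hessian is unchanged and only the linear term depends on v. Function names are hypothetical.

```python
def free_response(a, b_v, x0, v):
    """States x_1..x_N for u = 0 under x_{k+1} = a x_k + b u_k + b_v v_k,
    where the measured disturbance v = [v_0, ..., v_{N-1}] is assumed known."""
    N = len(v)
    return [a ** k * x0 + sum(a ** (k - 1 - j) * b_v * v[j] for j in range(k))
            for k in range(1, N + 1)]


def predict_with_disturbance(a, b, b_v, x0, z, v):
    """X = S z + (T x0 + S_v v): the input part S z (and hence the QP Hessian)
    does not depend on v; only the linear and constant terms do."""
    free = free_response(a, b_v, x0, v)
    return [free[k - 1] + sum(a ** (k - 1 - j) * b * z[j] for j in range(k))
            for k in range(1, len(z) + 1)]
```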

Feasibility, convergence, and stability

Convergence

- Use the value function (as a Lyapunov function)
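A numerical illustration of the value-function argument, assuming a scalar unconstrained LQ MPC whose terminal weight is the infinite-horizon Riccati solution P. With that terminal weight the MPC law equals the LQR law and the value is V(x) = P x^2; along the closed loop V drops by exactly (q + r K^2) x_k^2 each step. Names and numbers are illustrative.

```python
def dare_scalar(a, b, q, r, iters=500):
    """Infinite-horizon Riccati solution for x+ = a x + b u, by fixed-point iteration."""
    p = q
    for _ in range(iters):
        p = q + a * p * a - (a * p * b) ** 2 / (r + b * p * b)
    return p


def closed_loop_values(x0, a=1.2, b=1.0, q=1.0, r=0.1, steps=10):
    """Closed loop u = -K x with V(x) = P x^2; returns states, values, P and K."""
    p = dare_scalar(a, b, q, r)
    k = (b * p * a) / (r + b * p * b)
    xs = [x0]
    for _ in range(steps):
        xs.append((a - b * k) * xs[-1])
    values = [p * x * x for x in xs]
    return xs, values, p, k
```

The decrease V(x_{k+1}) - V(x_k) = -(q + r K^2) x_k^2 < 0 follows from the Riccati equation itself, which is why V serves as a Lyapunov function.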

Linear time-varying MPC (CDC’19 slides No. 2a)

LPV (Linear parameter-varying) models

- Linear model whose parameters vary with time, with a disturbance

- Get QP:

LTV (linear time-varying) model

- The model changes over time

- The measured disturbance is embedded in the model (?)

- Get QP:
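A scalar sketch of the LTV prediction matrices, assuming x_{k+1} = a_k x_k + b_k u_k with known sequences a_k, b_k (for an LPV model, evaluated along a known or predicted parameter trajectory). The structure matches the LTI case, except that powers of A become products of the time-varying matrices. The function name is hypothetical.

```python
from math import prod


def ltv_prediction(a_seq, b_seq):
    """Prediction matrices for x_{k+1} = a_k x_k + b_k u_k (scalar, time-varying):
    [x_1, ..., x_N] = S z + T x0 with
        T[k-1]    = a_{k-1} ... a_0
        S[k-1][j] = a_{k-1} ... a_{j+1} b_j   for j < k, else 0."""
    N = len(a_seq)
    S = [[0.0] * N for _ in range(N)]
    T = [0.0] * N
    for k in range(1, N + 1):
        T[k - 1] = prod(a_seq[:k])
        for j in range(k):
            S[k - 1][j] = prod(a_seq[j + 1:k]) * b_seq[j]   # empty product = 1
    return S, T
```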

Non-linear MPC

Model:

- Model

- Constraints:

Performance index:

- Performance index

- Need to check what this is

Solver

- Linearize to get an LPV/LTV model?

- SQP (sequential quadratic programming)?

Does a Kalman filter fit here?!
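A sketch of the linearization idea: evaluate the Jacobians of a nonlinear model along a nominal trajectory to obtain an LTV model for the deviations. The model f below is a hypothetical forward-Euler pendulum-like system, and central finite differences stand in for exact derivatives.

```python
import math


def f(x, u, dt=0.1):
    """Hypothetical discrete-time nonlinear model (forward-Euler, pendulum-like)."""
    return x + dt * (-math.sin(x) + u)


def linearize_along(xs, us, eps=1e-6):
    """Central finite differences a_k = df/dx, b_k = df/du at each nominal (x_k, u_k).
    Result: an LTV model  dx_{k+1} ~ a_k dx_k + b_k du_k  for deviations from the nominal."""
    a_seq, b_seq = [], []
    for x, u in zip(xs, us):
        a_seq.append((f(x + eps, u) - f(x - eps, u)) / (2 * eps))
        b_seq.append((f(x, u + eps) - f(x, u - eps)) / (2 * eps))
    return a_seq, b_seq
```

Repeating "linearize, solve the QP, update the nominal trajectory" is the basic loop behind SQP-type NMPC solvers.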

Single shooting vs. multiple shooting (CDC’19 slides No. 2a, p14)

- Single shooting: From

- Multiple shooting: From

- RTI (real-time iteration)

- Comparison:

| | Multiple shooting | Single shooting |
|---|---|---|
| Unstable systems | Better | |
| Initialization of states at intermediate nodes | Better | |
| Size of the QP/NLP | Bigger | |
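A sketch contrasting the two transcriptions on a hypothetical scalar model. Single shooting keeps only the inputs as decision variables and eliminates the states by simulation; multiple shooting also treats the states s_0..s_N as variables and adds the dynamics as equality constraints (defects), which is why its NLP is bigger but its intermediate states can be initialized freely.

```python
import math


def f(x, u, dt=0.1):
    """Hypothetical discrete-time nonlinear model."""
    return x + dt * (-math.sin(x) + u)


def stage_cost(x, u):
    return x * x + 0.1 * u * u


def single_shooting_cost(x0, us):
    """Decision variables: us only. States are obtained by forward simulation."""
    x, J = x0, 0.0
    for u in us:
        J += stage_cost(x, u)
        x = f(x, u)
    return J + x * x                       # terminal cost


def multiple_shooting(x0, us, ss):
    """Decision variables: us and states ss = [s_0, ..., s_N].
    Returns the cost and the defects that the NLP solver must drive to zero."""
    J = sum(stage_cost(s, u) for s, u in zip(ss[:-1], us)) + ss[-1] * ss[-1]
    defects = [ss[0] - x0] + [ss[k + 1] - f(ss[k], us[k]) for k in range(len(us))]
    return J, defects
```

At a dynamically consistent point both give the same cost; away from it, multiple shooting reports nonzero defects instead of propagating errors through a long simulation, which helps with unstable systems.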

Economic MPC (CDC’19 slides No. 2b)

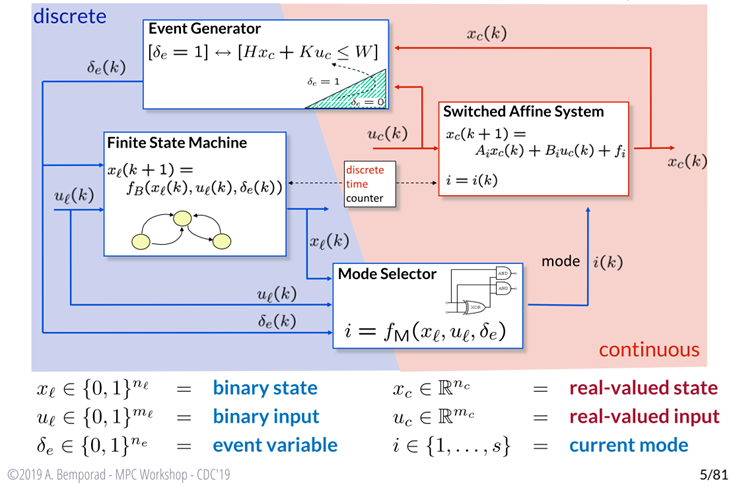

Hybrid and stochastic MPC (CDC’19 slides No. 3)

- What if the system has multiple modes?

- MIP (mixed-integer programming)

MPC with RL (CDC’19 slides No. 4)

| MPC | RL | |
|---|---|---|
| policy | optimal policy | |
| Value | LQR case: the value is | optimal value |
| Q-value | optimal action-value | |

Reinforcement learning

Policy evaluation (includes value evaluation, i.e., prediction, and Q evaluation, i.e., control)

- Monte Carlo

- prediction

- control
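A minimal sketch of Monte Carlo prediction, using the every-visit variant (an illustrative choice): V(s) is the average of the returns observed after each visit to s. Each episode is assumed to be a list of (state, reward) pairs, where the reward is received when leaving that state.

```python
from collections import defaultdict


def mc_prediction(episodes, gamma=1.0):
    """Every-visit Monte Carlo prediction from complete episodes."""
    total = defaultdict(float)
    count = defaultdict(int)
    for episode in episodes:
        g = 0.0
        for state, reward in reversed(episode):
            g = reward + gamma * g          # return from this time step onward
            total[state] += g
            count[state] += 1
    return {s: total[s] / count[s] for s in total}


print(mc_prediction([[("A", 0.0), ("B", 1.0)], [("B", 0.0)]]))
```

Monte Carlo needs complete episodes before any update, which is the main contrast with TD methods.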


- Temporal difference

- prediction: TD(0)

- control: SARSA/Q-learning

SARSA:
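A tabular sketch of the TD(0) prediction update and the SARSA update. SARSA bootstraps on the action a' actually chosen in s' (on-policy), whereas Q-learning would use max_a Q(s', a). Step size alpha and discount gamma are illustrative defaults.

```python
def td0_update(V, s, r, s_next, done, alpha=0.1, gamma=0.9):
    """TD(0): V(s) <- V(s) + alpha * (r + gamma * V(s') - V(s))."""
    target = r if done else r + gamma * V[s_next]
    V[s] += alpha * (target - V[s])


def sarsa_update(Q, s, a, r, s_next, a_next, done, alpha=0.1, gamma=0.9):
    """SARSA: Q(s,a) <- Q(s,a) + alpha * (r + gamma * Q(s',a') - Q(s,a)),
    where a' is the action the behavior policy actually picked in s'."""
    target = r if done else r + gamma * Q[s_next][a_next]
    Q[s][a] += alpha * (target - Q[s][a])
```

Unlike Monte Carlo, both updates can be applied after every single transition.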


Policy optimization

- Greedy policy updates

- Value evaluation: used for model-based optimization.

- Q evaluation: used for model-free optimization.


Exploration vs. exploitation

- Greedy in the limit with infinite exploration (GLIE), e.g.,
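A minimal sketch of one schedule that is greedy in the limit with infinite exploration: epsilon-greedy with epsilon_k = 1/k, where k is an episode counter (an illustrative choice). Every action keeps a nonzero probability for finite k, while the policy becomes greedy as k grows.

```python
import random


def epsilon_greedy(q_values, k, rng):
    """Epsilon-greedy action selection with epsilon_k = 1/k (k >= 1)."""
    eps = 1.0 / k
    if rng.random() < eps:
        return rng.randrange(len(q_values))                      # explore
    return max(range(len(q_values)), key=lambda i: q_values[i])  # exploit


rng = random.Random(0)
print([epsilon_greedy([0.0, 1.0], k, rng) for k in (1, 2, 10, 100)])
```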


Abstract/generalize

- Curse of dimensionality

- Function approximation (I think they combine this with the Q-learning part, or maybe they just use Q-learning as an example?)

Q-learning

- Curse of dimensionality

- Function approximation (use MPC here); ref. Gros’ paper @ TAC2020
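Before replacing the table with a function approximator (for example, an MPC scheme), the tabular version of Q-learning shows the update being generalized. The sketch below uses a hypothetical 5-state chain (reward 1 on reaching state 4), epsilon-greedy exploration, and the off-policy target r + gamma * max_a Q(s', a). All names and numbers are illustrative.

```python
import random

N_STATES, GOAL = 5, 4          # hypothetical chain: states 0..4, episode ends at 4
ACTIONS = (-1, +1)             # move left / move right


def step(s, a):
    s_next = min(max(s + a, 0), GOAL)
    reward = 1.0 if s_next == GOAL else 0.0
    return s_next, reward, s_next == GOAL


def greedy(Q, s, rng):
    best = max(Q[s])
    return rng.choice([i for i, q in enumerate(Q[s]) if q == best])  # random tie-break


def q_learning(episodes=500, alpha=0.5, gamma=0.9, eps=0.2, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        s = 0
        for _ in range(100):                                  # cap episode length
            i = rng.randrange(2) if rng.random() < eps else greedy(Q, s, rng)
            s_next, r, done = step(s, ACTIONS[i])
            target = r if done else r + gamma * max(Q[s_next])   # off-policy target
            Q[s][i] += alpha * (target - Q[s][i])
            s = s_next
            if done:
                break
    return Q


print(q_learning())
```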

Policy search

MPC

MPC-based RL

- Learn the true Q-function with MPC (Gros’ paper @ TAC2020)

- Enforcing safety

- Safe RL

- Safe Q-learning

- Safe Actor-Critic RL

- MPC sensitivities (differentiating the MPC solution?)

- Real-time NMPC and RL

- Mixed-Integer problems

Adding safety constraints

- Adding extra terms to the quadratic function

The linear-quadratic problem is optimized to minimize the cost. An extra penalty is added to the cost function to make the cost

- Mixed-integer programming solver.
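One common way to add such a penalty (an assumption about what the note intends) is an exact L1 penalty rho * max(0, u - u_max), which in practice is implemented with a slack variable so the problem stays a QP. For a hypothetical one-dimensional problem the minimizer has a closed form, showing that the constraint is enforced exactly once rho >= 2 (c - u_max).

```python
def soft_constrained_minimizer(c, u_max, rho):
    """Minimize (u - c)^2 + rho * max(0, u - u_max) over scalar u.
    The penalty is an exact (L1) penalty on violating u <= u_max."""
    if c <= u_max:
        return c                        # constraint inactive: unconstrained optimum
    # Violating side: derivative 2 (u - c) + rho = 0  ->  u = c - rho / 2,
    # clipped at u_max because the objective is convex and piecewise smooth.
    return max(u_max, c - rho / 2.0)


print(soft_constrained_minimizer(2.0, 1.0, 1.0))   # small rho: constraint violated
print(soft_constrained_minimizer(2.0, 1.0, 4.0))   # large rho: constraint enforced
```

A quadratic penalty on the violation would, by contrast, never enforce the constraint exactly for finite weights, which is why L1-type penalties are usually preferred for soft constraints.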

Something to figure out:

- Mixed-integer quadratic programming (MIQP)

- PWA (piecewise affine) approximation

Ref

- Model Predictive Control: from the Basics to Reinforcement Learning

- Sequential Linear Quadratic Optimal Control for Nonlinear Switched Systems

- Fast nonlinear Model Predictive Control for unified trajectory optimization and tracking